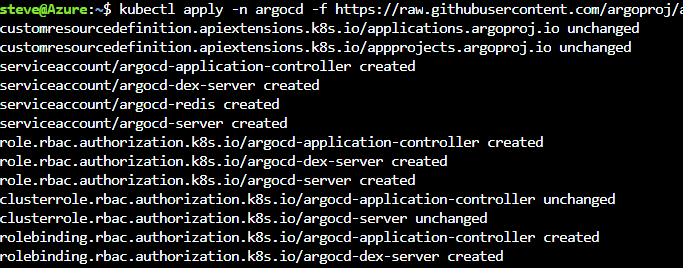

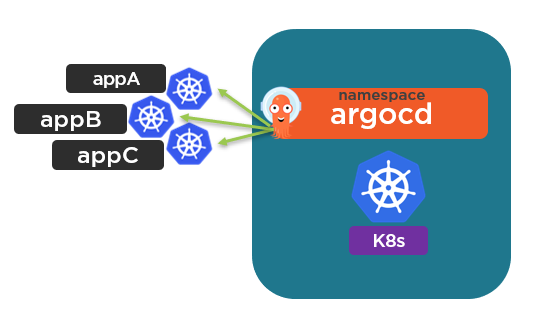

Argo CD is a GitOps operator and the goal of it is to be able to deploy apps to Kubernetes. In the majority of cases, we want to use Argo CD to deploy apps to many clusters.

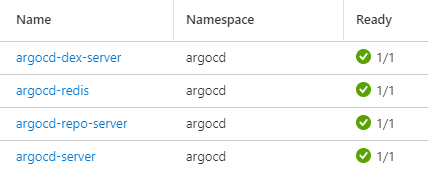

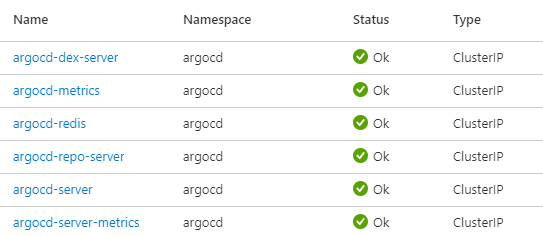

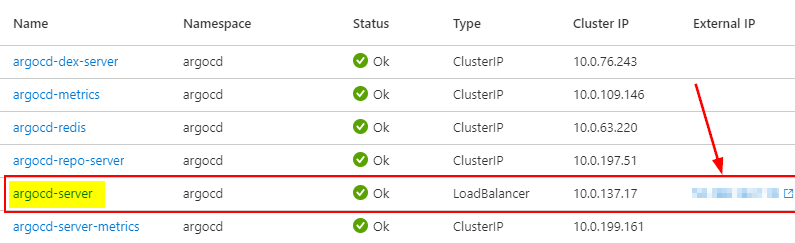

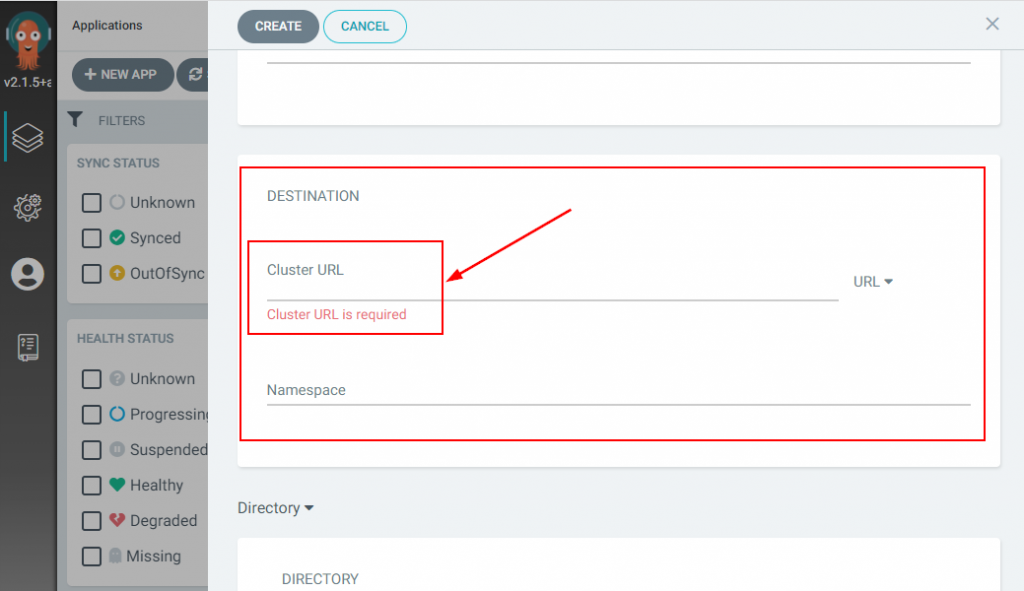

Argo CD itself is deployed as a set of pods on a Kubernetes cluster. By default with an Argo CD deployment, the cluster it is running on is set as “in-cluster” (https://kubernetes.default.svc). When apps are configured for deployment a Kubernetes Cluster under Destination is required. They can be deployed to either the “in-cluster” K8s cluster or an external K8s cluster.

In order to deploy apps to an external Kubernetes cluster, you will need to register an external K8s cluster with Argo CD.

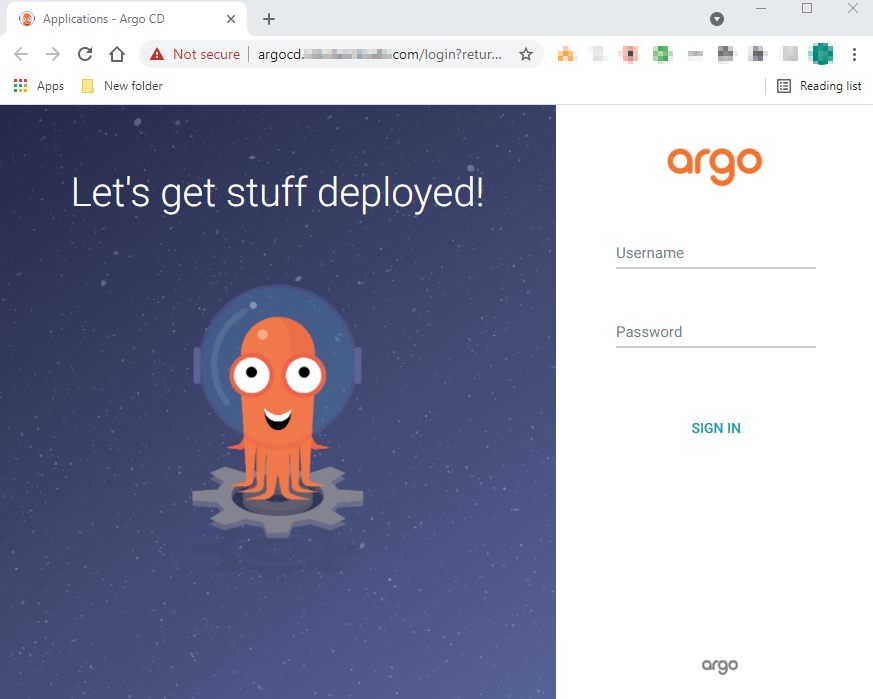

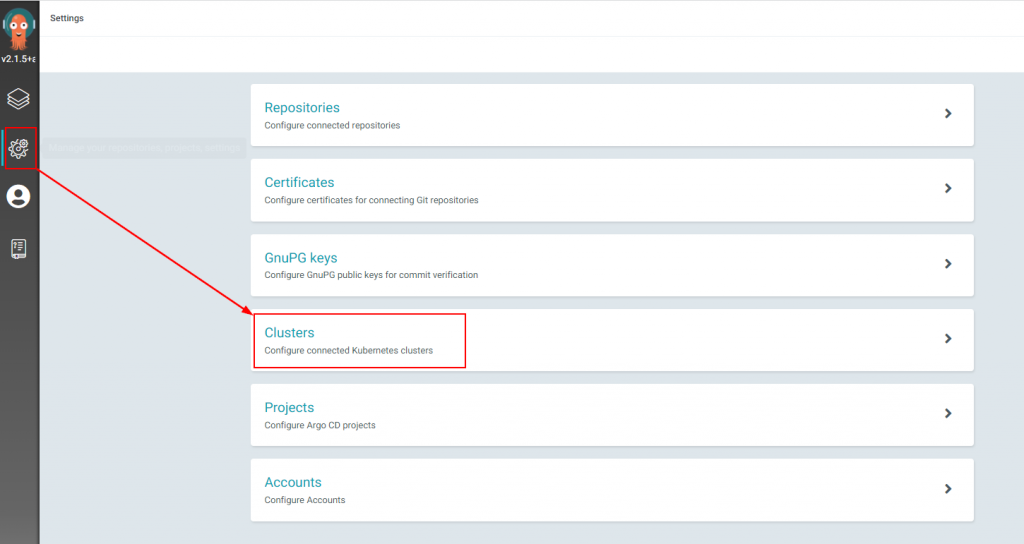

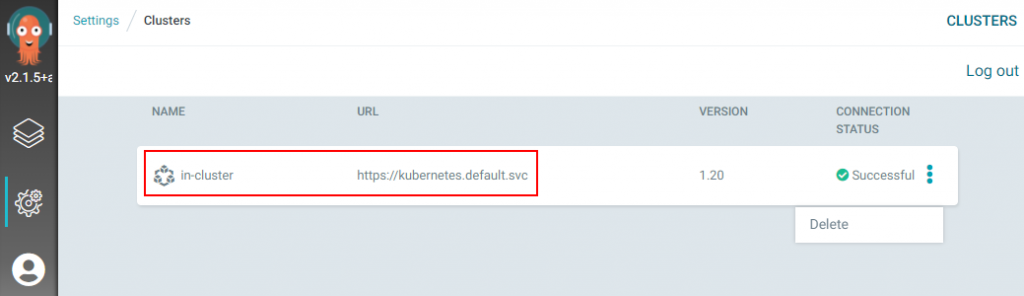

If you want to see the clusters you have registered with your Argo CD one way is through the web UI. Once you log in navigate to Settings and then Clusters to see them.

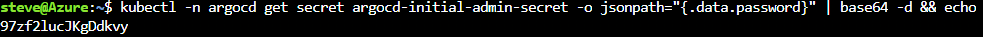

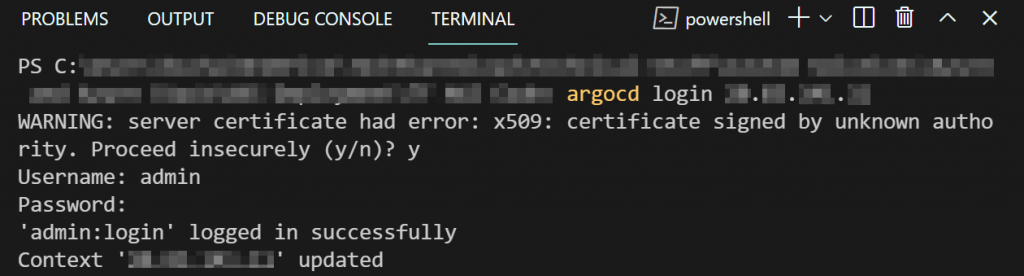

You can also see the clusters you have in the Argo CD CLI. To use the Argo CD CLI you need to log into the Argo CD API Server as shown in the following screenshot.

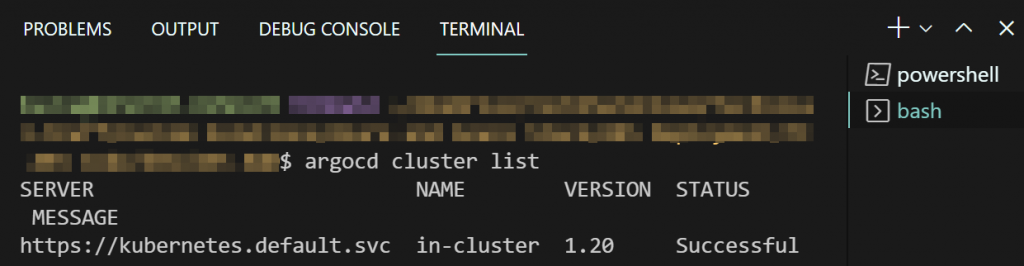

To see what clusters are registered from the CLI you can run

argocd cluster list

You will notice that you will only see the In-Cluster K8s cluster until you add an external one. Also, note that you are not able to register a new K8 cluster in the Argo CD web UI. You can only register a new K8s cluster from the Argo CD CLI. Within the Argo CD web UI you can delete the default in-cluster K8s cluster. This is not recommended.

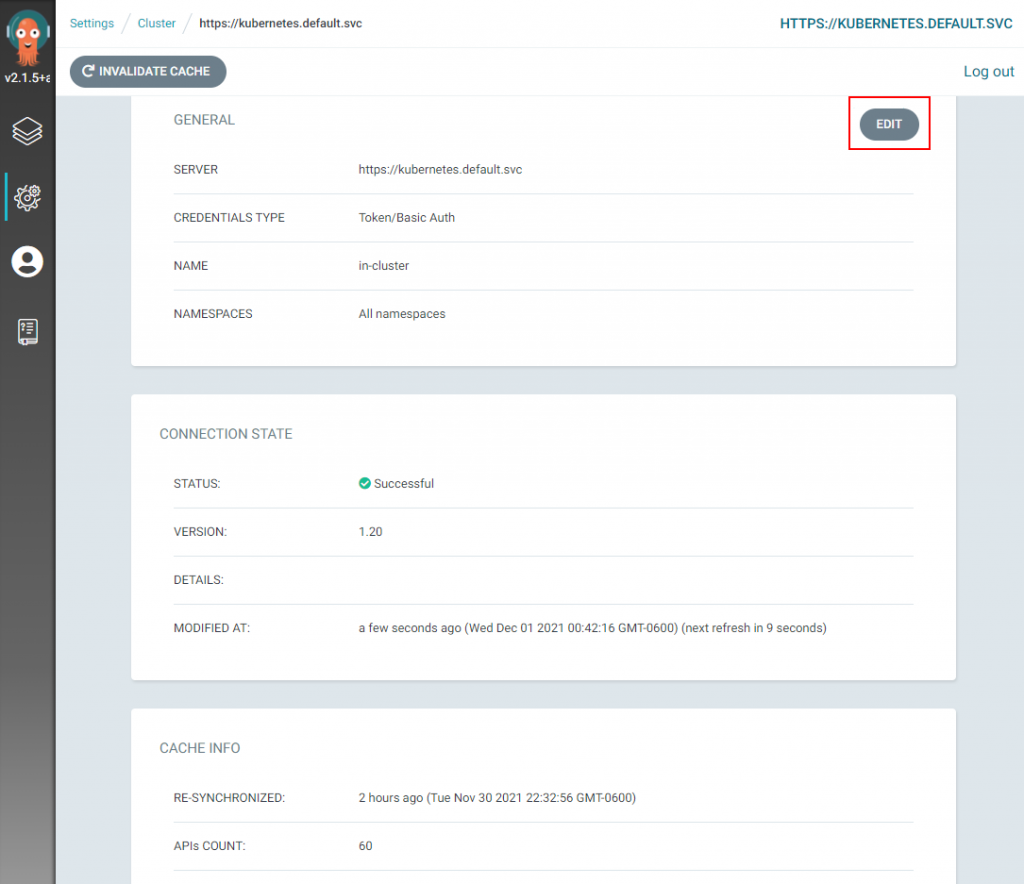

If you click on the In-Cluster K8s cluster you can modify some settings of the in-cluster K8s cluster in the Argo CD web UI such as the name of it and its namespace. Not useful when you want to have more control over the settings around the K8s cluster you will be deploying apps to.

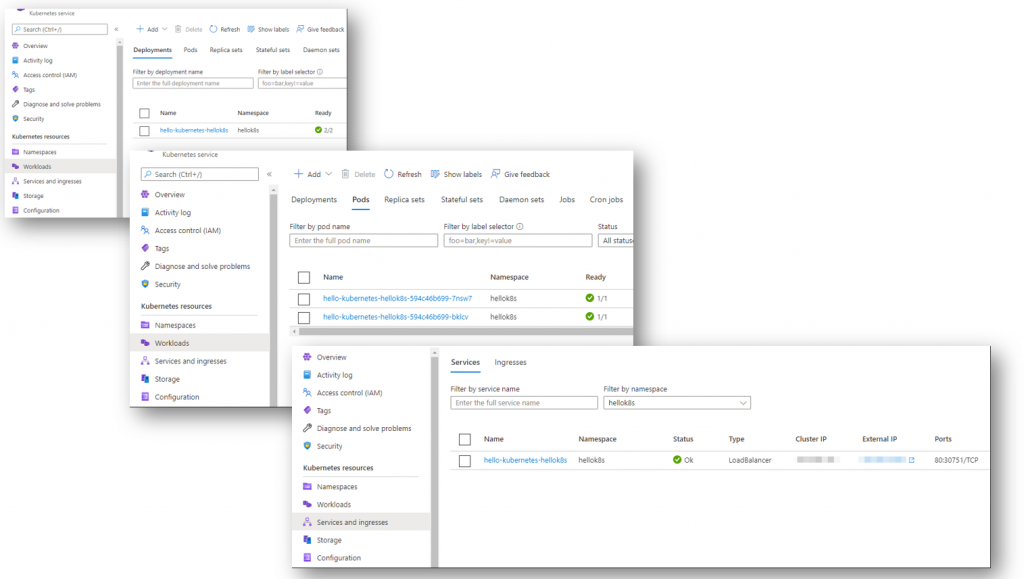

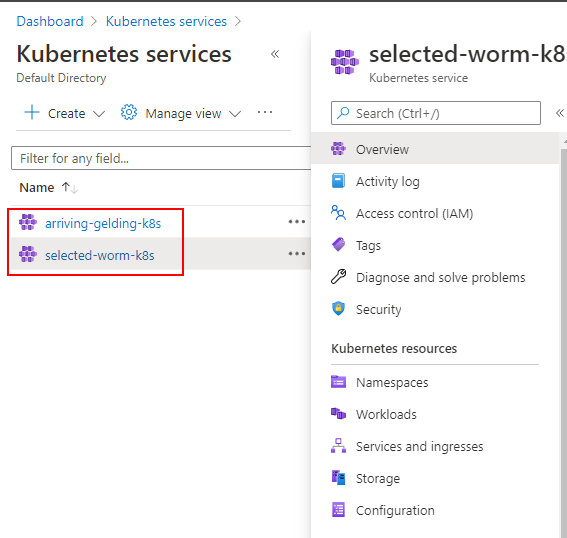

In my example, my Azure subscription has two AKS clusters. You can see this in the following screenshot. The arriving-gelding-k8s cluster is my In-Cluster object in Argo CD. The selected-worm-k8s is not my In-Cluster so I want to add this one to my Argo CD.

To add the new external cluster run use the following steps.

Step 1: Add your target K8s cluster to ArgoCD via the context in your kubectl config.

-For AKS you can simply log into your Azure subscription from VS Code on your computer and then run

az aks get-credentials –resource-group RGNAME –name AKSCLUSTERNAME

This will add the context for your AKS cluster to your kubeconfig file.

-For the process on your setup refer to the following link as it may differ: https://kubernetes.io/docs/tasks/access-application-cluster/configure-access-multiple-clusters

Step 2: List the K8 cluster contexts in your current kubeconfig file to ensure your target cluster has been added. Do this by locally running:

kubectl config get-contexts -o name

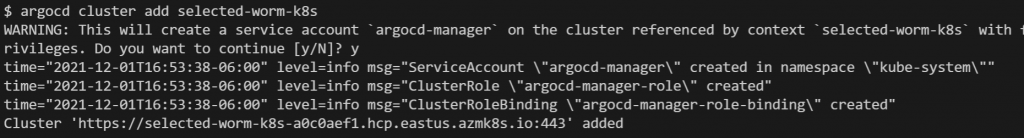

Step 3: Install a Service Account (argocd-manager), into the kube-system namespace of your kubeconfig file context:

argocd cluster add CONTEXTNAME

It will look like this:

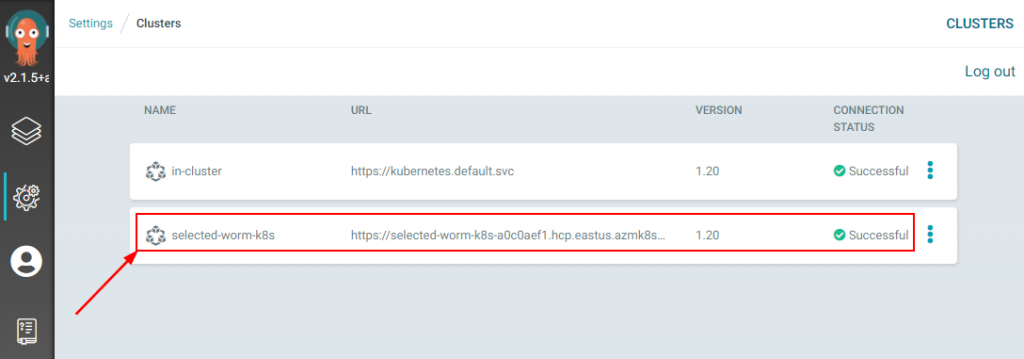

After completing the previous steps you can run argocd cluster list again or go into the portal. You will see your new cluster added.

That wraps up this blog post. Now you should be able to deploy to more than just your In-Cluster Kubernetes cluster. Check back soon for more posts on Argo CD, GitOps, Kubernetes, and Azure topics.