I am happy to share a new episode of Azure Friday. It was an honor to appear alongside Senior Product Manager Rajat Shrivastava in this episode to talk about AKS Backup. In this episode, we joined Scott Hanselman to explore how AKS Backup safeguards containerized apps and their data on AKS.

Backup is frequently overlooked and only gains significance when a failure makes recovery necessary. In the world of containers and Kubernetes, backup is often perceived as unnecessary. In reality, backups are essential even for containerized environments. Microsoft has introduced a backup solution for Azure Kubernetes Service (AKS) and its workloads that is built on Azure Backup.

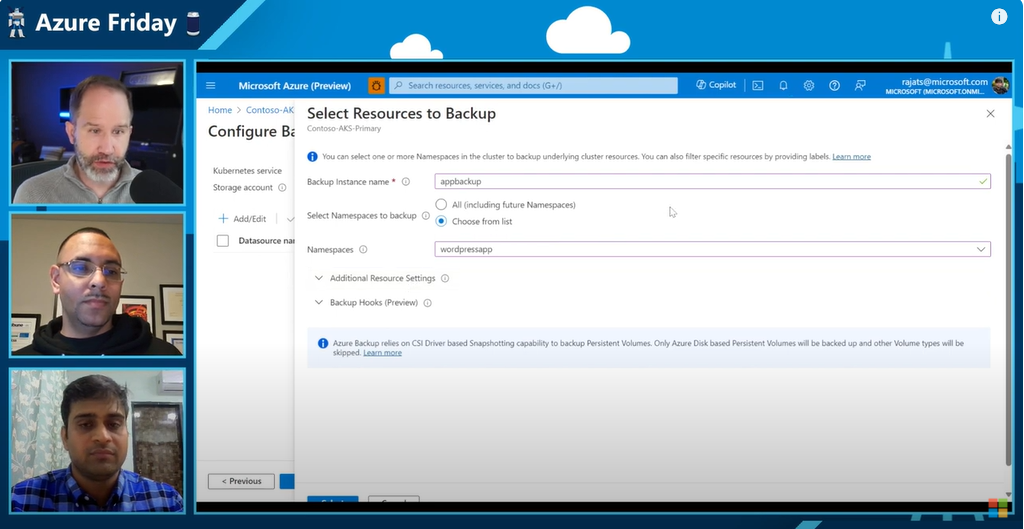

In this episode, we dove into the importance of backing up containers, even when they are predominantly stateless. We cover why safeguarding containers is crucial and how AKS Backup protects the workloads running on AKS.

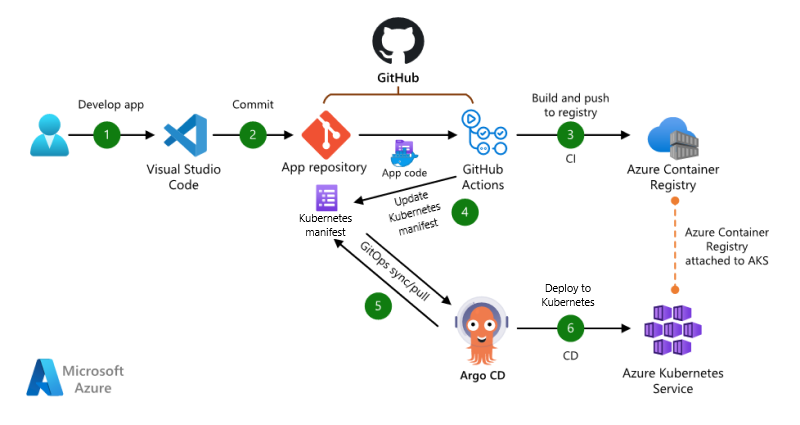

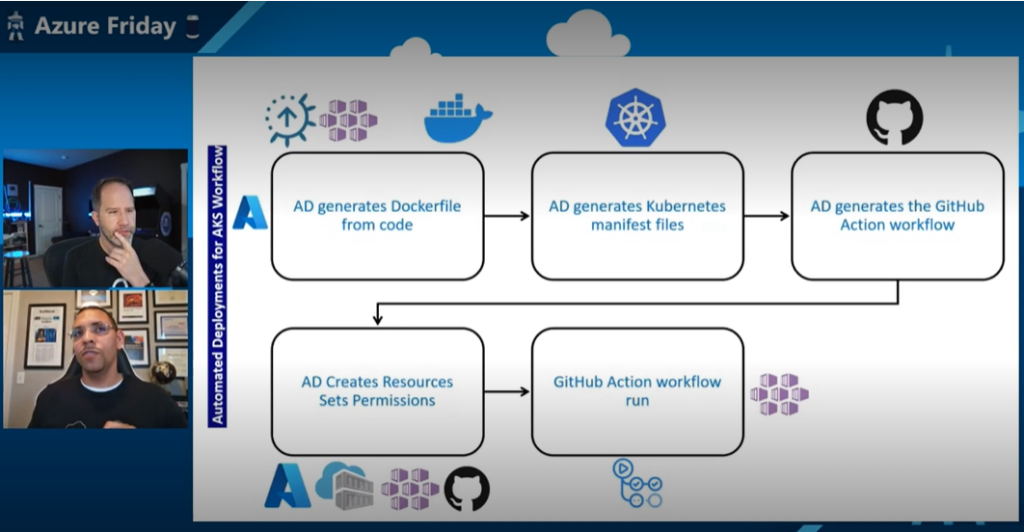

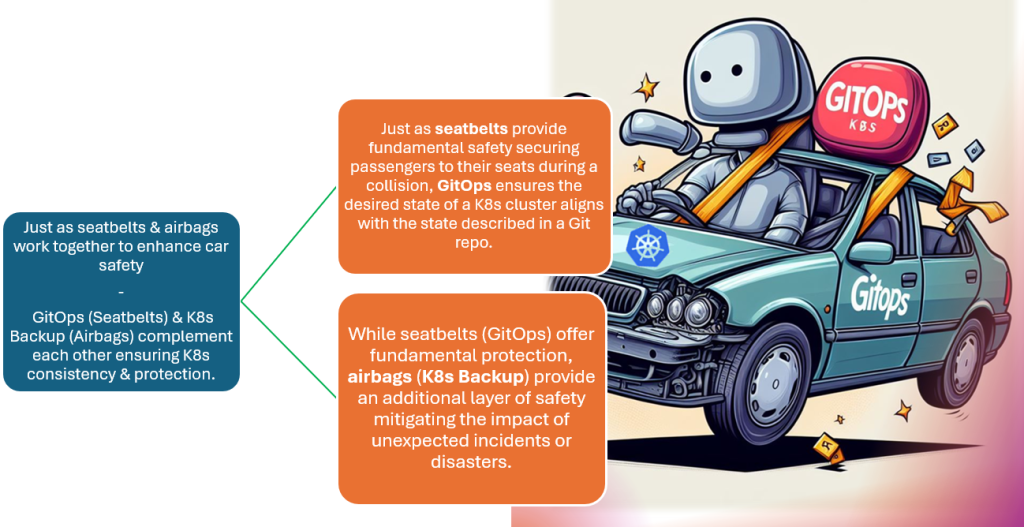

In the episode, we also explore questions you may have about backing up K8s, and we walk through demos showing how to protect AKS with AKS Backup and how to perform a restore. We even took time to answer this common question: "Do I really need to back up my K8s cluster if I am running stateless apps and have everything in code, e.g. IaC, CI/CD, or GitOps?" The answer is yes. In fact, one should think of it this way: "GitOps and K8s Backup are like seatbelts and airbags." Here is a graphic to break this down further:

You can check out the episode here:

Additional resources on AKS and AKS Backup:

■ Backup for AKS: Cloud native, Enterprise ready, Kubernetes aware backup – https://aka.ms/azfr/766/01

■ What is Azure Kubernetes Service backup? – https://aka.ms/azfr/766/02

■ Cluster extensions – https://aka.ms/azfr/766/03

■ Prerequisites for Azure Kubernetes Service backup using Azure Backup – https://aka.ms/azfr/766/04

■ Create a Pay-as-You-Go account (Azure) – https://aka.ms/azfr/766/payg

■ Create a free account (Azure) – https://aka.ms/azfr/766/free