I’m thrilled to announce that I’ll be delivering my inaugural keynote at Minnebar 18, an annual technology conference hosted in Minneapolis by the well-known tech organization Minnestar. My talk will explore the power of investment in shaping our collective future.

As an (un)conference, Minnebar provides a dynamic platform for tech enthusiasts to converge and share insights. Visit the site for full information on Minnebar 18: https://minnestar.org/ultimate-guide-to-minnebar18

Minnebar 18 takes place on April 20, 2024, at Best Buy’s Corporate Campus, and it promises to be a day to remember. I’m particularly honored to lead Session 0, also known as the keynote. It marks both my debut at Minnebar and my first time addressing this vibrant community in the opening session of the day.

My talk is titled “Let’s Invest: Building Strong Community and Empowering Our Future.” Here is the description: “

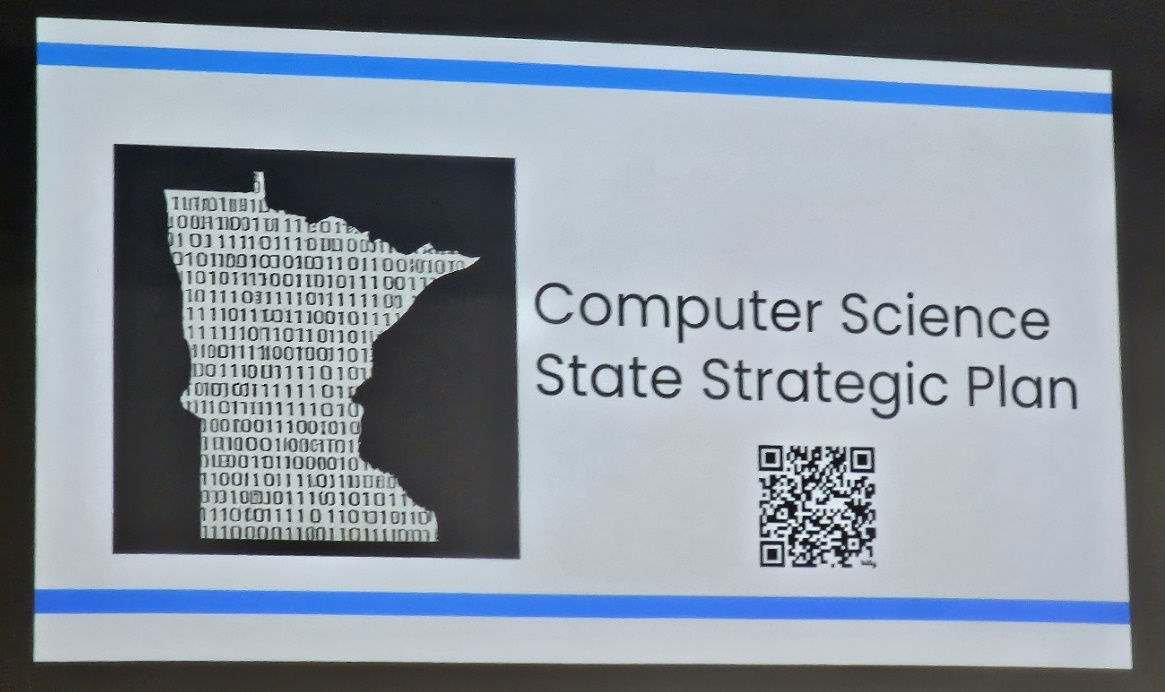

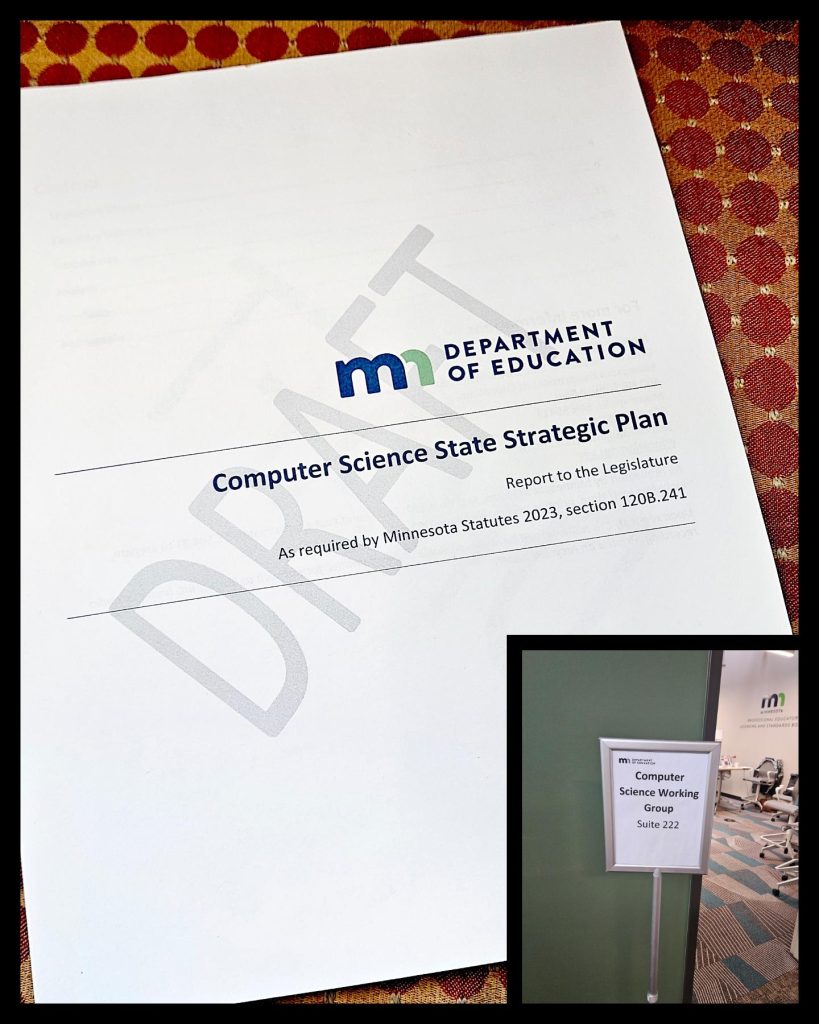

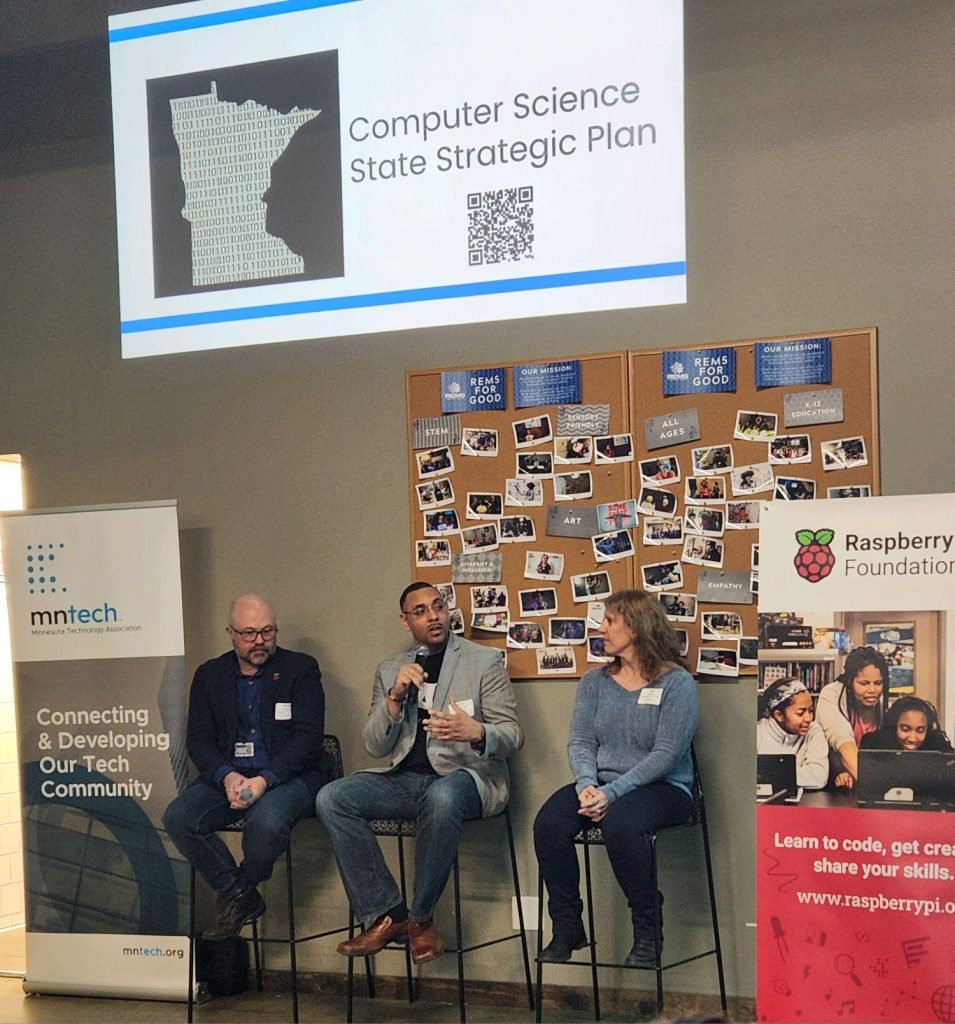

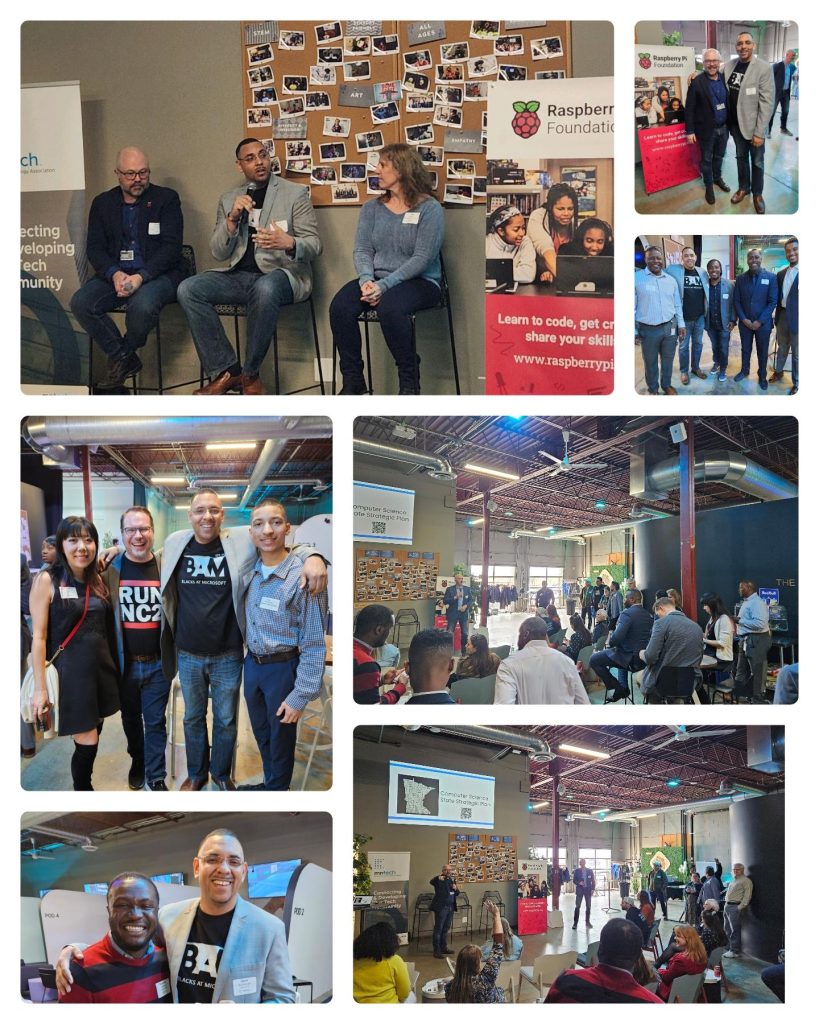

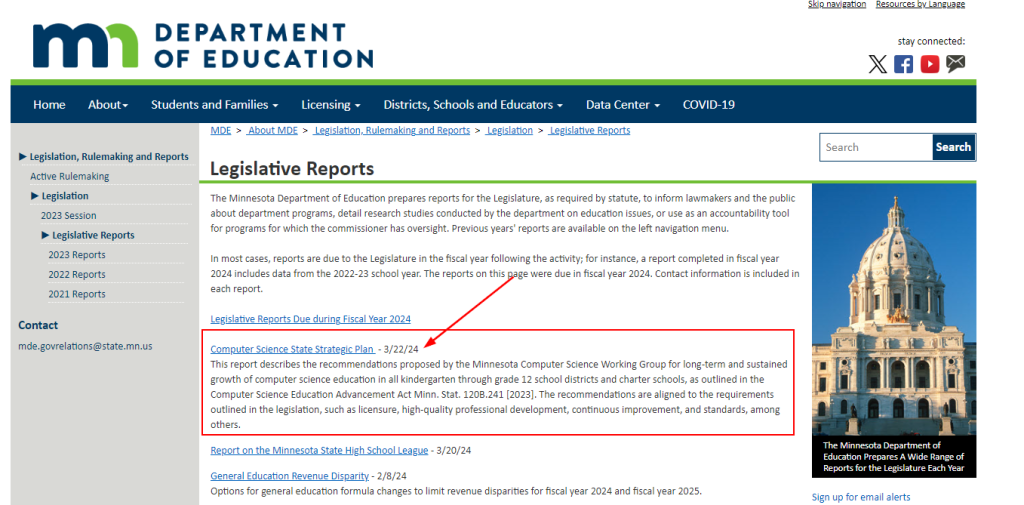

Explore the power of investment in shaping our collective future in this keynote talk. Hear about the speaker’s journey and the investments others made that contributed to his success. Dive into the Minnesota computer science plan and its role in fostering youth talent, alongside the broader theme of community investment. Discover how organizations in the Twin Cities’ tech space are actively supporting individuals at all levels, from youth initiatives to professional development programs and more.

Join us to uncover actionable strategies for investing in your own success and the success of others, strengthening our community fabric. Together, let’s ignite a collective commitment to investing in each other and our communities, forging a path to strong communities and an empowered future for all.”

Here is a sampling of some of the sessions you will see at Minnebar:

- 🐍 Battlesnake: Learn, Build, Duel! 🐍 by Misha Hawthorn

- Future-proofed product design from 20 years of NPS lessons learned by Hugh Murphy

- Amazon Primer by Kisha Delain and Levi McCormick

- The Dark Arcana of Chrome DevTools by Victoria Perkins

- Minnesota Digital Services by Mathias Rechtzigel and Liz Tupper

- 🤖 What’s a Transformer? Understanding the Innovation That Changed Artificial Intelligence by Collin Flynn

You can check out other sessions here:

https://sessions.minnestar.org/home

Mark your calendars for April 20, 2024, and join us at Minnebar 18. It’s free and open to anyone with an interest in technology. I look forward to connecting. See you there! Register here:

https://www.eventbrite.com/e/minnebar18-tickets-863048881437